Best Tools for Data Conversion to Buy in March 2026

Clockwise Tools IP54 Grade Digital Caliper, DCLR-0605 0-6" /150mm, Inch/Metric/Fractions Conversion, Stainless Steel, Large LCD Screen

- IP54 RATING: WATER & DUST RESISTANT, PERFECT FOR ANY WORK ENVIRONMENT.

- HIGH PRECISION: MEASURE ACCURATELY WITH ±0.001 PRECISION & LARGE DISPLAY.

- DURABLE DESIGN: PREMIUM STAINLESS STEEL ENSURES LONG-LASTING, ACCURATE USE.

Dougrtha Multifunctional Data Cable Conversion Head Portable Storage Box, Multi-Type Charging Line Convertor USB Type C Adapter Tool Contains Sim Card Slot Tray Eject Pin, Phone Holder (Black)

-

VERSATILE CHARGING: FOUR PORTS FOR SEAMLESS CHARGING AND DATA TRANSFER.

-

TANGLE-FREE STORAGE: COMPACT CASE KEEPS CABLES ORGANIZED AND PROTECTED.

-

DURABLE & FAST: HIGH-QUALITY MATERIALS ENSURE SPEED AND LONGEVITY.

Multi USB Charging Adapter Cable Kit, USB C to Ligh-ting Adapter Box, Conversion Set USB A Type C Lightn-ing Micro Adapter Kit,60W Charging and Data Transfer Cable Kit Sim Tray Eject Tool Slots

- VERSATILE CHARGING FOR ALL DEVICES: CONVENIENT MULTI-ADAPTER KIT.

- LIGHTNING-FAST DATA TRANSFER: UP TO 480MBPS WITH MULTIPLE OPTIONS.

- COMPACT & PORTABLE DESIGN: PERFECT FOR TRAVEL AND EVERYDAY USE!

Calculated Industries 4095 Pipe Trades Pro Advanced Pipe Layout and Design Math Calculator Tool for Pipefitters, Steamfitters, Sprinklerfitters and Welders | Built-in Pipe Data for 7 Materials , White

- INSTANT ANSWERS FOR PIPE DESIGN CHALLENGES: STREAMLINE YOUR BUILDS EFFORTLESSLY.

- BUILT-IN PIPE DATA FOR QUICK CALCULATIONS: SAVE TIME WITH ACCURATE MEASUREMENTS.

- DURABLE, ALL-IN-ONE CALCULATOR FOR EVERY JOB: ENHANCE EFFICIENCY ON-SITE AND OFF.

Calculated Industries 8030 ConversionCalc Plus Ultimate Professional Conversion Calculator Tool for Health Care Workers, Scientists, Pharmacists, Nutritionists, Lab Techs, Engineers and Importers

- CONVERT 70+ UNITS EFFORTLESSLY WITH SIMPLE INPUT AND SETTINGS.

- ACCESS 500+ COMBINATIONS WITHOUT NEEDING COMPLEX CALCULATORS.

- IMPROVE ACCURACY AND SAVE TIME ON CALCULATIONS WITH EASE!

Clockwise Tools Digital Indicator, DIGR-0105 0-1 Inch/25.4mm, Inch/Metric Conversion, Auto Off

-

LARGE LCD WITH DUAL UNIT SUPPORT – EASY READINGS IN INCH/METRIC FORMATS.

-

HIGH PRECISION MEASUREMENTS – ACHIEVE ACCURACY OF ±0.001 FOR RELIABLE RESULTS.

-

VERSATILE FOR VARIOUS TASKS – PERFECT FOR LATHE CALIBRATION AND PRECISE ALIGNMENT.

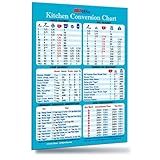

Must-Have Kitchen Conversion Chart Magnet 50% More Data Exclusive Common Cup Measurement Bonus Ingredient Substitution Food Calories Cooking Baking Measuring Guide Recipe Cookbook Accessories Gift

- HANDS-FREE REFERENCE GUIDE SAVES TIME AND KEEPS YOUR KITCHEN TIDY!

- 50% MORE DATA, PLUS BONUS CALORIE INFO AND SUBSTITUTIONS INCLUDED!

- BEAUTIFUL DESIGN ENSURES EASY READING FROM ANY DISTANCE IN THE KITCHEN.

To convert a pandas DataFrame to TensorFlow data, you can use the tf.data.Dataset.from_tensor_slices() function. It slices array-like input along the first dimension, so each row of the DataFrame becomes one dataset element. A DataFrame whose columns share a single numeric dtype can be passed directly, usually together with its labels as a (features, labels) tuple; for mixed dtypes, pass dict(df) so that each column becomes its own named tensor. The resulting tf.data.Dataset can be shuffled, batched, and fed to Keras training APIs such as model.fit(), so you can keep preparing data in the familiar pandas format and still train with TensorFlow.
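As a minimal sketch of this conversion, the snippet below builds a small DataFrame and turns it into a dataset of (features, label) pairs. The toy data and the column names `age`, `income`, and `label` are assumptions made for illustration; they do not come from any particular dataset.

```python
import pandas as pd
import tensorflow as tf

# Hypothetical toy data; the column names are illustrative only.
df = pd.DataFrame({
    "age": [25, 32, 47, 51],
    "income": [40000.0, 52000.0, 81000.0, 67000.0],
    "label": [0, 1, 1, 0],
})

# Separate the target column from the feature columns.
labels = df.pop("label")

# dict(df) maps each column name to its Series, so every column
# keeps its own dtype and becomes a named tensor in the dataset.
dataset = tf.data.Dataset.from_tensor_slices((dict(df), labels))

# Inspect the first (features, label) element.
features, label = next(iter(dataset))
first_age = int(features["age"].numpy())
first_label = int(label.numpy())
num_examples = sum(1 for _ in dataset)
```

Each element is a dictionary of scalar tensors keyed by column name plus a scalar label, which suits models that take named inputs. For a purely numeric table, passing `(df.values, labels)` instead would yield one feature vector per row.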

How to split a pandas DataFrame into training and testing sets for TensorFlow conversion?

You can split a pandas DataFrame into training and testing sets by using the train_test_split function from scikit-learn. Here's an example of how to do this:

    import pandas as pd
    from sklearn.model_selection import train_test_split

    # Load your dataframe
    df = pd.read_csv('data.csv')

    # Split the data into features and target variable
    X = df.drop('target_column', axis=1)
    y = df['target_column']

    # Split the data into training and testing sets (80% / 20%)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Convert the data to NumPy arrays for TensorFlow
    X_train = X_train.values
    X_test = X_test.values
    y_train = y_train.values
    y_test = y_test.values

You have now split your pandas DataFrame into training and testing sets, held as NumPy arrays that are ready to be converted into TensorFlow datasets.
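One way to finish the conversion is sketched below: the split arrays are wrapped in tf.data datasets, with shuffling applied to the training set only. The synthetic DataFrame, the feature names `f1` and `f2`, and the batch size of 16 are assumptions chosen so the example runs on its own.

```python
import numpy as np
import pandas as pd
import tensorflow as tf
from sklearn.model_selection import train_test_split

# Synthetic stand-in for a real CSV file (illustrative data only).
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "f1": rng.normal(size=100),
    "f2": rng.normal(size=100),
    "target_column": rng.integers(0, 2, size=100),
})

X = df.drop("target_column", axis=1).values.astype("float32")
y = df["target_column"].values

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

BATCH_SIZE = 16  # assumed value; tune for your model and memory

# Shuffle only the training data; keep the test order fixed.
train_ds = (
    tf.data.Dataset.from_tensor_slices((X_train, y_train))
    .shuffle(buffer_size=len(X_train), seed=42)
    .batch(BATCH_SIZE)
)
test_ds = tf.data.Dataset.from_tensor_slices((X_test, y_test)).batch(BATCH_SIZE)

n_train_batches = sum(1 for _ in train_ds)
n_test_batches = sum(1 for _ in test_ds)
```

Both datasets can then be passed to `model.fit(train_ds, validation_data=test_ds)`. Setting the shuffle buffer to the full training set size gives a uniform shuffle, at the cost of holding that many elements in memory.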

What is the significance of creating custom functions for data preprocessing in TensorFlow conversion?

Creating custom functions for data preprocessing when converting data for TensorFlow can provide several benefits:

- Efficiency: Custom functions can be optimized for your specific data and preprocessing requirements, leading to faster and more efficient data conversion.

- Flexibility: Custom functions allow you to control the data preprocessing steps and customize them according to your needs, enabling you to handle complex data transformations easily.

- Reusability: With custom functions, you can encapsulate complex preprocessing steps into reusable modules that can be easily shared and used across different projects.

- Maintainability: Custom functions make it easier to manage and maintain your data preprocessing code, as you can encapsulate preprocessing logic in separate functions and modules for better organization.

In summary, custom preprocessing functions can make a pandas-to-TensorFlow workflow more efficient, flexible, reusable, and maintainable.
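To make the reusability point concrete, here is a small sketch of a custom preprocessing function applied with `Dataset.map`. The helper name `make_standardizer` and the toy arrays are hypothetical; the idea is only that the statistics are computed once and the same function can be reused on any dataset with the same feature layout.

```python
import numpy as np
import tensorflow as tf

def make_standardizer(mean, std):
    """Return a map function that standardizes features and passes labels through."""
    mean = tf.constant(mean, dtype=tf.float32)
    std = tf.constant(std, dtype=tf.float32)

    def standardize(features, label):
        return (features - mean) / std, label

    return standardize

# Toy feature matrix and labels (illustrative values only).
X = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]], dtype="float32")
y = np.array([0, 1, 0])

# Compute the statistics once, from the training data.
standardize = make_standardizer(X.mean(axis=0), X.std(axis=0))

dataset = tf.data.Dataset.from_tensor_slices((X, y)).map(standardize)

# Collect the transformed features to check the result.
scaled = np.stack([features.numpy() for features, _ in dataset])
```

Because the statistics are captured in a closure, the same `standardize` function can be mapped over a test dataset later, so the test data is scaled with the training statistics rather than its own.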

How to handle outliers in a pandas DataFrame before converting to TensorFlow data?

To handle outliers in a pandas DataFrame before converting it to TensorFlow data, you can use the following methods:

- Identify outliers: Use statistical methods like Z-score or interquartile range (IQR) to detect outliers in your dataframe. You can then visualize them using box plots or scatter plots to further examine the data.

- Remove outliers: You can remove outliers from your dataset by filtering out values that fall outside a certain range or threshold. You can do this by using boolean indexing or by using the quantile method to remove values outside a certain percentile range.

- Replace outliers: Instead of removing outliers, you can replace them with more representative values. For example, you can replace them with the median or mean of the feature, or mark them as missing and estimate new values by interpolation.

- Winsorization: Winsorization caps extreme values rather than dropping them. You choose a threshold, typically a lower and an upper percentile, and replace any value beyond it with the value at that threshold.

- Transformation: Transforming the data can also help in handling outliers. A log transformation or a similar monotonic transformation compresses the range of a skewed feature and reduces the influence of extreme values.

Once you have handled the outliers in your pandas DataFrame, you can convert the cleaned data to a TensorFlow-compatible format, such as a NumPy array or a tf.data.Dataset object, for further analysis and modeling.
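The methods above can be sketched in pandas as follows. The single column named `value` and its contents are made up for illustration, and the 1.5 × IQR fences are a common convention rather than a rule from this article.

```python
import numpy as np
import pandas as pd

# Toy column with one obvious outlier (illustrative data only).
df = pd.DataFrame({"value": [10.0, 12.0, 11.0, 13.0, 12.0, 11.0, 10.0, 300.0]})

# Identify: flag values outside the 1.5 * IQR fences.
q1 = df["value"].quantile(0.25)
q3 = df["value"].quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
is_outlier = (df["value"] < lower) | (df["value"] > upper)

# Remove: keep only the rows inside the fences (boolean indexing).
removed = df[~is_outlier]

# Replace: swap flagged values for the column median.
replaced = df["value"].mask(is_outlier, df["value"].median())

# Cap: clip values to the fences (a winsorization-style treatment).
capped = df["value"].clip(lower=lower, upper=upper)

# Transform: log1p compresses large values and is defined at zero.
logged = np.log1p(df["value"])
```

Which variant to keep depends on the data: removal shrinks the dataset, replacement and capping keep the row count, and the log transform keeps every value but changes the feature's scale.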

How to apply data augmentation techniques to a pandas DataFrame before converting to TensorFlow data?

The steps below apply image augmentation with Keras's ImageDataGenerator, so they assume that one column of the DataFrame holds each image as a 3D NumPy array (height, width, channels):

- Import the necessary libraries:

      import numpy as np
      import pandas as pd
      import tensorflow as tf
      from tensorflow.keras.preprocessing.image import ImageDataGenerator

- Load your data into a pandas dataframe:

      data = pd.read_csv('your_data.csv')

- Define your ImageDataGenerator with the desired data augmentation techniques:

      datagen = ImageDataGenerator(
          rotation_range=20,
          width_shift_range=0.2,
          height_shift_range=0.2,
          shear_range=0.2,
          zoom_range=0.2,
          horizontal_flip=True,
          vertical_flip=True,
      )

- Apply the data augmentation techniques to your dataframe:

      augmented_data = []
      for index, row in data.iterrows():
          # Assumes the dataframe has an image column whose cells are
          # 3D NumPy arrays, as random_transform expects a single image
          image = row['image_column_name']
          image = datagen.random_transform(image)
          augmented_data.append(image)

- Convert the augmented data to a NumPy array and then to TensorFlow data:

      X = np.array(augmented_data)
      y = data['target_column'].values  # Assumes the dataframe has a target column

      dataset = tf.data.Dataset.from_tensor_slices((X, y))

You have now applied data augmentation to the images referenced by your pandas DataFrame and converted the result to a TensorFlow dataset, which you can use to train your model.
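The TensorFlow documentation marks ImageDataGenerator as deprecated, so an alternative worth knowing is to augment inside the tf.data pipeline itself. The sketch below swaps in `tf.image` operations applied through `Dataset.map`; it is a different technique from the steps above, and the random 32×32 images, the labels, and the `augment` helper are all assumptions made for illustration.

```python
import numpy as np
import tensorflow as tf

# Random stand-in images in [0, 1) with shape (N, height, width, channels).
rng = np.random.default_rng(0)
images = rng.random((8, 32, 32, 3), dtype=np.float32)
labels = rng.integers(0, 2, size=8)

def augment(image, label):
    """Apply random flips and brightness changes to one image."""
    image = tf.image.random_flip_left_right(image)
    image = tf.image.random_brightness(image, max_delta=0.1)
    # Brightness shifts can leave the valid range, so clip back to [0, 1].
    image = tf.clip_by_value(image, 0.0, 1.0)
    return image, label

dataset = (
    tf.data.Dataset.from_tensor_slices((images, labels))
    .shuffle(buffer_size=8)
    .map(augment)
    .batch(4)
)

batch_images, batch_labels = next(iter(dataset))
```

Because the map function runs each time the dataset is iterated, every epoch sees freshly augmented images, whereas augmenting once up front produces a fixed set.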