Best Machine Learning Tools to Buy in February 2026

Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems

- MASTER ML PROJECTS END-TO-END WITH SCIKIT-LEARN'S GUIDANCE.

- EXPLORE DIVERSE MODELS: SVMS, TREES, AND ENSEMBLE METHODS INCLUDED!

- BUILD ADVANCED NEURAL NETS USING TENSORFLOW AND KERAS SEAMLESSLY.

Data Mining: Practical Machine Learning Tools and Techniques (Morgan Kaufmann Series in Data Management Systems)

- EXCLUSIVE OFFER: BE THE FIRST TO EXPERIENCE OUR NEW PRODUCT!

- LIMITED-TIME LAUNCH PROMOTION: SPECIAL PRICING AVAILABLE NOW!

- ENHANCED FEATURES AND IMPROVEMENTS DESIGNED FOR BETTER PERFORMANCE!

Mathematical Tools for Data Mining: Set Theory, Partial Orders, Combinatorics (Advanced Information and Knowledge Processing)

Learning Resources STEM Simple Machines Activity Set, Hands-on Science Activities, 19 Pieces, Ages 5+

- IGNITE CURIOSITY WITH HANDS-ON STEM TOOLS FOR YOUNG EXPLORERS!

- ENHANCE CRITICAL THINKING AND PROBLEM-SOLVING THROUGH FUN ACTIVITIES!

- DISCOVER REAL-WORLD APPLICATIONS OF SIMPLE MACHINES WITH ENGAGING PLAY!

Learning Resources Magnetic Addition Machine, Math Games, Classroom Supplies, Homeschool Supplies, 26 Pieces, Ages 4+

- BOOST MATH SKILLS WITH HANDS-ON COUNTING AND FINE MOTOR ACTIVITIES!

- MAGNETIC DESIGN EASILY ATTACHES TO SURFACES FOR DYNAMIC LEARNING FUN!

- ENGAGING 26-PIECE SET KEEPS KIDS EXCITED ABOUT LEARNING MATH CONCEPTS!

Designing Machine Learning Systems: An Iterative Process for Production-Ready Applications

Lakeshore Learning Materials Lakeshore Addition Machine Electronic Adapter

- LONG-LASTING AND EASY-TO-CLEAN DURABLE PLASTIC DESIGN.

- CONVENIENT ONE-HANDED OPERATION FOR INCREASED EFFICIENCY.

- COMPACT 9.5-INCH SIZE FOR SPACE-SAVING STORAGE.

Data Mining: Practical Machine Learning Tools and Techniques (The Morgan Kaufmann Series in Data Management Systems)

Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems

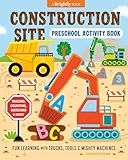

Construction Site Preschool Activity Book: Fun Learning with Trucks, Tools, and Mighty Machines (Preschool Activity Books)

To get percentage predictions for each class from TensorFlow, apply the softmax function (tf.nn.softmax) to the raw outputs (logits) of your classification model. Softmax turns the raw scores into probabilities that are non-negative and sum to 1 across the classes. Multiplying them by 100 gives a percentage for each class. If the model's last layer already uses activation='softmax', the output of model.predict is already a probability vector, so do not apply softmax a second time; just scale by 100. These percentages show how confident the model is in each class, although they are not guaranteed to be well-calibrated probabilities.
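The following is a minimal, dependency-free sketch of the arithmetic. It computes softmax for a single example's logits and scales the result to percentages. In TensorFlow itself, tf.nn.softmax(logits) performs the same normalization on tensors. The function name softmax_percentages and the logit values are hypothetical.

```python
import math


def softmax_percentages(logits):
    """Convert raw model outputs (logits) for one example into per-class percentages."""
    # Subtract the largest logit for numerical stability; softmax is unchanged by this shift.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    # Normalize to probabilities that sum to 1, then scale to percentages.
    return [100.0 * e / total for e in exps]


# Hypothetical logits for a 3-class problem.
logits = [2.0, 1.0, 0.1]
percentages = softmax_percentages(logits)
for class_index, pct in enumerate(percentages):
    print(f"class {class_index}: {pct:.1f}%")
```

Subtracting the maximum logit does not change the result. It only prevents math.exp from overflowing when the logits are large.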

How to save and load a TensorFlow model?

To save and load a TensorFlow model, you can use the model's save() method and the load_model() function (tf.keras.models.load_model) from TensorFlow's tf.keras API. Here's a step-by-step guide:

- Save the model:

```python
import numpy as np
import tensorflow as tf

# Build and compile your model
model = tf.keras.Sequential([
    tf.keras.Input(shape=(100,)),
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax'),
])
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Placeholder data: replace with your own features and one-hot encoded labels
x_train = np.random.rand(1000, 100).astype('float32')
y_train = tf.keras.utils.to_categorical(np.random.randint(0, 10, size=1000), num_classes=10)

# Train your model
model.fit(x_train, y_train, epochs=10)

# Save your model
model.save('my_model.h5')
```

- Load the model:

```python
import tensorflow as tf
from tensorflow.keras.models import load_model

# Load the model
loaded_model = load_model('my_model.h5')

# Evaluate the loaded model
loaded_model.evaluate(x_test, y_test)
```

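By following these steps, you can save your TensorFlow model and load it again later for further training, evaluation, or deployment. Replace x_train, y_train, x_test, and y_test with your actual training and testing data. Because the model is compiled with categorical_crossentropy, the labels must be one-hot encoded. The .h5 extension saves the model in HDF5 format. Recent TensorFlow/Keras releases treat HDF5 as a legacy format and recommend the native Keras format instead, which you get by using the .keras extension (for example, model.save('my_model.keras')).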
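As a quick sanity check of the round trip, the sketch below saves a small model in the native .keras format, reloads it, and compares predictions on the same inputs. It assumes a recent TensorFlow release that supports the .keras format. The tiny model, the random data, and the temporary file path are placeholders, not part of the example above.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Small placeholder model and data; swap in your own.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(3, activation="softmax"),
])
model.compile(optimizer="adam", loss="categorical_crossentropy")

rng = np.random.default_rng(0)
x = rng.random((32, 4)).astype("float32")
y = tf.keras.utils.to_categorical(rng.integers(0, 3, size=32), num_classes=3)
model.fit(x, y, epochs=1, verbose=0)

# Save in the native Keras format (hypothetical file name in a temporary directory).
path = os.path.join(tempfile.mkdtemp(), "round_trip_model.keras")
model.save(path)

# Reload the model and compare predictions on the same inputs.
restored = tf.keras.models.load_model(path)
original_preds = model.predict(x, verbose=0)
restored_preds = restored.predict(x, verbose=0)
print("predictions match:", np.allclose(original_preds, restored_preds))
```

If the predictions do not match, the usual culprit is a custom layer or function that was not registered for serialization.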

How to handle missing data in TensorFlow?

There are several ways to handle missing data before feeding it to a TensorFlow model:

- Imputation: Replace missing values with a certain value, such as the mean, median, or mode of the feature column.

- Drop missing values: Remove rows that contain missing data from the dataset. This is simple, but it discards training examples.

- Create a separate category: If a categorical feature has missing values, treat "missing" as a category of its own.

- Predict missing values: If you have enough data and computational resources, you can train a model to predict the missing values based on the other features in the dataset.

- Use a special marker: Instead of imputing or dropping missing values, encode them with a sentinel value, ideally together with a binary indicator feature, so the model can tell which values were missing.
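The drop, impute, separate-category, and marker strategies can be sketched with NumPy before the data reaches TensorFlow. This is a minimal illustration on a hypothetical toy dataset. It assumes that NaN marks a missing numeric value and None marks a missing categorical value. The sentinel value and the "__missing__" label are arbitrary choices. Model-based prediction of missing values is not shown.

```python
import numpy as np

# Hypothetical numeric feature matrix: rows are examples, columns are features; NaN marks a missing value.
X = np.array([
    [1.0, 10.0],
    [np.nan, 20.0],
    [3.0, np.nan],
    [5.0, 40.0],
])
missing = np.isnan(X)  # boolean mask of missing entries

# Drop missing values: keep only the rows that contain no NaNs.
X_dropped = X[~missing.any(axis=1)]

# Imputation: replace each NaN with its column mean (computed while ignoring NaNs).
# In practice, compute these statistics on the training split only and reuse them for validation/test data.
col_means = np.nanmean(X, axis=0)
X_imputed = np.where(missing, col_means, X)

# Special marker: fill NaNs with a sentinel and append one binary indicator column per feature.
SENTINEL = 0.0  # hypothetical choice; ideally a value outside the feature's normal range
X_marked = np.hstack([np.where(missing, SENTINEL, X), missing.astype(X.dtype)])

# Separate category: map missing categorical values to their own label.
colors = ["red", None, "blue", None]  # hypothetical categorical column
colors_filled = [c if c is not None else "__missing__" for c in colors]

print(X_dropped)
print(X_imputed)
print(X_marked)
print(colors_filled)
```

Inside a tf.data pipeline, the same fill step can be written with tf.where(tf.math.is_nan(x), fill_value, x).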

Overall, the right way to handle missing data depends on the nature of the data and the modeling task at hand. Experiment with different approaches and compare their impact on model performance using a held-out validation set.

How to visualize data using TensorFlow?

To visualize data with TensorFlow, you can use TensorBoard, TensorFlow's interactive visualization toolkit. It can display a wide range of data, such as loss and accuracy curves, histograms of weights and biases, and even images and audio.

Here's a simple example of how you can use TensorBoard to visualize data in TensorFlow:

- First, install TensorBoard if it is not already available. It is normally installed automatically as a dependency of the tensorflow pip package:

```shell
pip install tensorboard
```

- Next, you can include the following code in your TensorFlow script to log data for visualization:

```python
# Import the necessary libraries
import tensorflow as tf

# Create a summary writer
log_dir = "logs/"
summary_writer = tf.summary.create_file_writer(log_dir)

# Generate some data
data = tf.random.normal([1000])

# Log the data to TensorBoard
with summary_writer.as_default():
    tf.summary.histogram("Data", data, step=0)

# Make sure the event file is written to disk
summary_writer.flush()
```

- Finally, launch TensorBoard from the command line. Run the following command from the directory that contains the logs/ folder, or pass the full path to --logdir:

```shell
tensorboard --logdir=logs/
```

This starts a local web server, by default at http://localhost:6006, which you can open in your browser to view the data logged by the script. To log other kinds of data, use the other tf.summary functions, such as tf.summary.scalar, tf.summary.image, tf.summary.audio, and tf.summary.text.
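TensorBoard is most often used to watch loss and accuracy during training. This minimal sketch assumes tf.keras is available. The built-in tf.keras.callbacks.TensorBoard callback writes these metrics, plus weight histograms when histogram_freq=1, to a log directory while model.fit runs. The model, the random data, and the temporary log directory are placeholders.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Hypothetical log directory; in a real project you might use something like "logs/fit".
log_dir = tempfile.mkdtemp()

# Tiny placeholder model and random data, used only to produce some logs.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(8,)),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

rng = np.random.default_rng(0)
x = rng.random((64, 8)).astype("float32")
y = rng.integers(0, 2, size=(64, 1)).astype("float32")

# The callback logs loss/accuracy each epoch; histogram_freq=1 also logs weight histograms every epoch.
tensorboard_cb = tf.keras.callbacks.TensorBoard(log_dir=log_dir, histogram_freq=1)
model.fit(x, y, epochs=2, verbose=0, callbacks=[tensorboard_cb])

# Collect the event files that TensorBoard will read.
event_files = [
    os.path.join(root, name)
    for root, _, names in os.walk(log_dir)
    for name in names
    if name.startswith("events.out.tfevents")
]
print(f"wrote {len(event_files)} event file(s) under {log_dir}")
```

Pointing tensorboard --logdir at the same directory shows the training curves and histograms in the browser.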